Video Encoding: Go for the Specialist or the Jack-of-All-Trades?

One of the hardest choices encoding technicians have to make is deciding between hardware and software. Hardware-based encoders and transcoders have had a performance advantage over software since computers were invented. That's because dedicated, limited-purpose processors are designed to run a specific algorithm, while the general-purpose processor that runs encoding software is designed to handle many different functions. It's the specialist versus the jack-of-all-trades.

In the past few years, processors and workflows have changed. The great disruptor has been time and the economics of Moore's Law, the famous observation that the number of transistors on a chip approximately doubles every 24 months. The logical outcome of Moore's Law is that CPUs become roughly twice as powerful every couple of years, and by some measures processing power has recently grown even faster than that. Lately, Intel, whose co-founder Gordon Moore formulated Moore's Law, has been adding specialty functions alongside its math co-processors to narrow the gap between general-purpose processors and specialty processors.
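To see how quickly the doubling compounds, here is a small Python sketch. It assumes an idealized, perfectly regular 24-month doubling period, which real chips only approximate, and the function names are illustrative rather than taken from any source.

```python
def growth_factor(months: float, doubling_period_months: float = 24.0) -> float:
    """Return how many times larger a quantity gets if it doubles every
    `doubling_period_months` months (an idealized Moore's Law curve)."""
    return 2.0 ** (months / doubling_period_months)


def transistor_count(initial: float, months: float,
                     doubling_period_months: float = 24.0) -> float:
    """Project a transistor count forward under the same idealized curve."""
    return initial * growth_factor(months, doubling_period_months)


if __name__ == "__main__":
    for years in (2, 4, 10):
        print(f"{years:>2} years -> x{growth_factor(years * 12):.0f}")
```

Under that assumption, a decade is five doublings, or a 32x increase in transistor count.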

There are many layers and elements to both a general-purpose processor and a task-specific hardware processor. The general-purpose CPU is the most common -- there are literally billions of them in all manner of computing devices -- while the more purpose-oriented processors include digital signal processors (DSPs), field-programmable gate arrays (FPGAs), and integrated circuits (ICs) that are available for various industrial appliances and widely used in cellphones. Many of the structures and elements are similar across all types, but there are considerable differences. If you are not familiar with the elements of the various types, here are the basic structures of both.

The General-Purpose CPU

The general-purpose CPU is laid out with flexible core elements, such as the arithmetic logic unit (ALU) and control unit (CU), plus accessory elements that offer extra features for performance. Basically, these two core units talk to each other, bring in data from memory as needed, and send work to the other elements. Other elements include I/O processors, logic gates, integrated circuits, and -- on most newer processors and especially on the Intel Xeon processors -- a beefy math co-processor. The math co-processor assists the ALU and can handle the more extreme and complex mathematical computations. Essentially, it gives the processor the extra horsepower it might require.

The Dedicated Processor

Specific-purpose hardware encoders have been around longer than general-purpose processors, which have historically been slower at mathematical equations and algorithm problems. History is vitally important to understanding the market and technology, not to mention getting a sense of what the future holds. An early milestone came in 1965, when Intelsat 1 (Early Bird) became the first commercial satellite deployed to downlink video and audio. Since then, the world has been using dedicated processors to process video, and the technology has advanced by leaps and bounds toward higher density and quality.

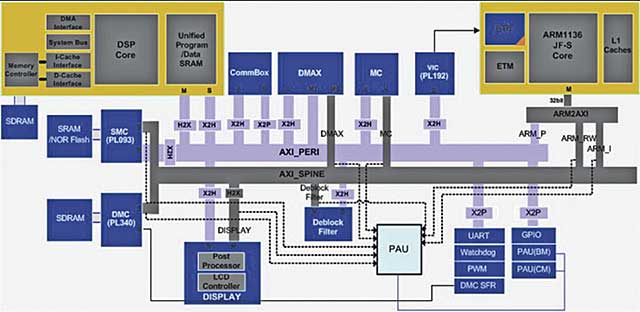

This is a general layout of a video ARM DSP. The ARM core runs the embedded operating system, working like a traffic cop to control input and output.

Dedicated processors, such as digital signal processors (DSPs), graphics processing units (GPUs), and vector processors, all have a very similar design structure. The most basic and common element is an I/O manager, which has a tiny onboard operating system along with memory. This is the traffic cop, controlling input and output. Then there are multiple specialized processing modules that execute, very quickly, the instructions that DSPs and other dedicated processors support. Unlike a general-purpose processor, which has many general instructions that may not be the most efficient for the task at hand, dedicated processors rely on accelerated, per-function instructions that are more job-specific.
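To make the traffic-cop idea concrete, here is a toy Python model of an I/O manager routing work to per-function modules. The module names (`motion_estimation`, `transform`, `entropy_coding`) and their stand-in behavior are hypothetical illustrations, not any vendor's actual block diagram.

```python
from typing import Callable, Dict, List

# Hypothetical per-function "modules". On a real DSP these would be
# hardware blocks with their own accelerated instructions; here they are
# plain functions that tag the data so the routing is visible.
def motion_estimation(block: str) -> str:
    return f"me({block})"

def transform(block: str) -> str:
    return f"dct({block})"

def entropy_coding(block: str) -> str:
    return f"vlc({block})"


class IOManager:
    """Toy 'traffic cop': routes each job to the module registered
    for that job type."""

    def __init__(self) -> None:
        self.modules: Dict[str, Callable[[str], str]] = {}

    def register(self, job_type: str, module: Callable[[str], str]) -> None:
        self.modules[job_type] = module

    def dispatch(self, job_type: str, block: str) -> str:
        if job_type not in self.modules:
            # A dedicated chip simply has no path for unsupported work.
            raise ValueError(f"no module for job type {job_type!r}")
        return self.modules[job_type](block)

    def run_pipeline(self, stages: List[str], block: str) -> str:
        # Feed the output of each module into the next one, in order.
        for stage in stages:
            block = self.dispatch(stage, block)
        return block


if __name__ == "__main__":
    manager = IOManager()
    manager.register("motion", motion_estimation)
    manager.register("transform", transform)
    manager.register("entropy", entropy_coding)
    print(manager.run_pipeline(["motion", "transform", "entropy"], "mb0"))
```

The trade-off the article describes shows up in `dispatch`: anything without a registered module cannot run at all, which is the flip side of the speed a dedicated path buys.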

Dedicated processors and encoders have a variety of applications and workflows. If you look at the major users of professional encoders, you will see that in many cases they rely on specialized encoders. The following well-known companies make DSP chips: LSI Corp.; Texas Instruments, Inc.; Analog Devices, Inc.; Sony; and Magnum Semiconductor. These DSPs are used in devices such as media gateways, telepresence devices, cellphones, and military and radar processing.

FPGAs are now very popular implementations of DSP functions because of their flexibility of setup and upgrade. Since they are field-upgradeable, their development costs for the user are significantly lower than those of DSPs and application-specific integrated circuits (ASICs; more on those in a moment) from the traditional DSP providers. You can find FPGAs from Altera Corp. and Xilinx, Inc. that have DSP functions built in. If you want a board or system that is easier to upgrade in the field, then this is probably the best way to go.

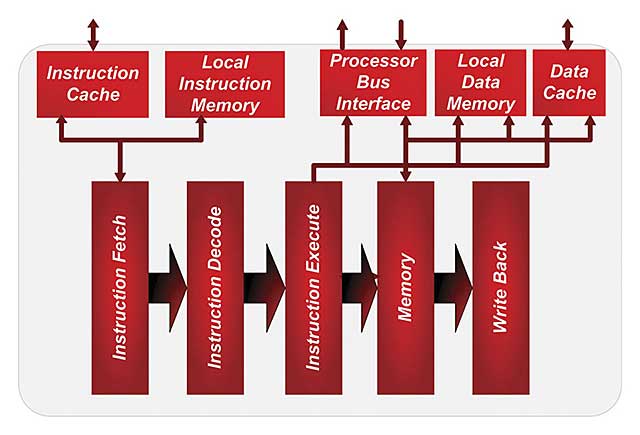

While each manufacturer will tweak its design slightly, this is an overview of how communications and packets flow into the modules of DSPs and ASICs.

Another implementation of the dedicated processor is the ASIC. These factory-programmed DSPs are used wherever cost is a crucial consideration, because they offer special functionality at optimal price and performance. In general, they are more expensive to design, but once designed they are cost-effective for appliance and board manufacturers to build into their systems. Many manufacturers of DSPs also manufacture ASICs; companies such as NXP Semiconductors, Broadcom Corp., and Freescale, Inc. also make custom ASIC DSPs.

If you ever open up a hardware encoding appliance -- from IP media gateways to broadcast encoders and decoders -- you will see several of the chips listed above. You can find appliances for every industry. Today you will find a dedicated hardware-based encoding device from Harmonic, Inc.; Harris; Tandberg Data; or NTT Communications in every TV station or cable TV headend, and you'll find appliances or cards from ViewCast Corp. or Digital Rapids in many hybrid encoding farms, since they accelerate some of the functions in hardware. If you've watched a video on YouTube, then you have seen video encoded by RGB Networks with the RipCode equipment, which used large numbers of Texas Instruments, Inc. DSPs.

Pros and Cons of Hardware Encoders

There are always pros and cons when it comes to purpose-built hardware encoders and dedicated appliances. The dedicated hardware approach with DSPs or other chips is the perfect solution for media gateways and low-latency military applications. These devices are designed to run 24/7 with little or no human interaction. Some processors can encode an entire frame in the 1ms-10ms (millisecond) range, and some FPGAs can encode one in the 10ms-30ms range. Those speeds make it possible to build appliances whose total latency, from encode to transmission to decode, is less than 100ms. Right now you can only get that low latency using the right DSPs, ASICs, and FPGAs. The average lifespan of an appliance is 5-10 years, depending on the configuration and manufacturer. Similar lifetimes are assumed for systems that rarely change, such as satellite uplink or cable system encoders.
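The sub-100ms figure is a budget that the encode, transmission, and decode stages share. The sketch below adds up one path; the encode times come from the ranges above, while the network and decode figures are assumed example values, not measurements from any device.

```python
from dataclasses import dataclass


@dataclass
class LatencyPath:
    encode_ms: float   # time to encode one frame
    network_ms: float  # transmission delay (assumed example value)
    decode_ms: float   # time to decode one frame (assumed example value)

    def total_ms(self) -> float:
        # End-to-end latency is the sum of the three stages.
        return self.encode_ms + self.network_ms + self.decode_ms

    def fits_budget(self, budget_ms: float = 100.0) -> bool:
        return self.total_ms() < budget_ms


if __name__ == "__main__":
    # Upper ends of the DSP and FPGA encode ranges quoted in the text.
    dsp = LatencyPath(encode_ms=10, network_ms=40, decode_ms=10)
    fpga = LatencyPath(encode_ms=30, network_ms=40, decode_ms=10)
    for name, path in (("DSP", dsp), ("FPGA", fpga)):
        print(name, path.total_ms(), "ms, under 100ms:", path.fits_budget())
```

With these assumed network and decode figures, both paths stay under 100ms, but the FPGA path leaves noticeably less headroom for a slower network.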

The primary drawback of dedicated hardware-based encoding is that the codec on the processor is generally impossible to upgrade. Every DSP, ASIC, or FPGA is based on an algorithm that was locked down well before the chip ships. By the time the chip is ready to be sold, the codec is 6 months to a year old. Add more design cycles for appliance development and manufacturing, and the end result is a device based on a codec that's a year or more old. If an improvement to the codec comes out, the chip or device might never be able to integrate it, depending on the manufacturer or the way the chip was designed. The dedicated DSP approach can save a lot of money, but at the expense of flexibility. Those chips do just what they were originally designed to do and nothing more.

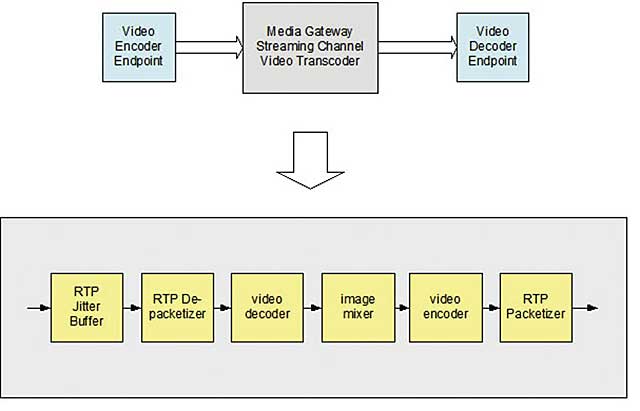

Video passes from a hardware encoder to a media gateway, which adjusts the stream to address network conditions and the end user's video decoding device. When done, it sends the adjusted stream to the video decoder.

There's another issue with dedicated chip-based encoders: Who determines the quality of the codecs and streams? Is it a DSP engineer, a compressionist, or the producer and director? In a TV station, the chief engineer and executive producer usually decide together what station image goes over the air. If they use a hardware encoder, in many cases decisions about encoding parameters have already been taken out of their hands. The broadcast engineer has to work within the parameters the chip manufacturer allows end users to change, so while there is usually some control, there may not be as much as a producer or engineer would like. There are only so many operations and cycles you can put on a chip, so some functionality is uneconomical to implement.
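One way to picture this limitation is as a whitelist: the chip exposes a few knobs and rejects everything else. The parameter names and ranges below are hypothetical examples, not taken from any particular encoder.

```python
from typing import Dict, Tuple

# Hypothetical knobs a chip vendor might expose, with allowed ranges.
EXPOSED_PARAMS: Dict[str, Tuple[int, int]] = {
    "bitrate_kbps": (500, 20000),
    "gop_length": (1, 300),
}


def validate_settings(requested: Dict[str, int]) -> Dict[str, int]:
    """Return the settings the (hypothetical) hardware will accept.

    Raises ValueError for knobs the vendor never exposed or for values
    outside the allowed range; the operator has no way around either.
    """
    accepted: Dict[str, int] = {}
    for name, value in requested.items():
        if name not in EXPOSED_PARAMS:
            raise ValueError(f"{name!r} is not adjustable on this device")
        low, high = EXPOSED_PARAMS[name]
        if not low <= value <= high:
            raise ValueError(f"{name}={value} outside allowed range {low}-{high}")
        accepted[name] = value
    return accepted


if __name__ == "__main__":
    print(validate_settings({"bitrate_kbps": 6000, "gop_length": 60}))
```

A software encoder, by contrast, can expose as many parameters as its developers choose, because adding one costs code rather than silicon.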

The Pros and Cons of Software Encoders

General-purpose CPUs share some similarities and architecture with dedicated processors. They are designed to handle the everyday functions of your PC or server, and they are optimized for mundane tasks such as word processing. This is why your machine likely has a powerful graphics card: graphics is a specialty function that is best offloaded to a purpose-designed processor. If you do any nonlinear video editing, you likely have a capture card with some specialty processors to give you real-time output or transitions.

In the encoding and streaming industry, we mostly use a capture board and one or more of the many available software encoding packages. There are algorithms and formulas for every application, from live encoding to file-based transcoding to software-based decoding. These days most software-based encoders have hooks in the code to offload certain functions to accelerators or to let multiple CPUs run functions in parallel, to get the best performance and quality. More recently, Intel has begun offering onboard GPUs with features such as MPEG decoding, analysis of a video stream's motion vectors, and other functions.

This overview shows how a GPU or video accelerator is laid out. Again, one device works as a traffic cop to send work to the appropriate processors, then takes the video streams back and reassembles them, allowing video to be encoded at a faster rate.
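The same split, process, and reassemble pattern is how software encoders spread work across several CPU cores. Below is a minimal Python sketch using the standard library's `concurrent.futures`. `zlib.compress` stands in for a real video encoder purely so the example runs, and the fixed-size chunking is an assumption; real encoders split on boundaries such as GOPs or slices.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split(data: bytes, chunk_size: int) -> List[bytes]:
    """The 'traffic cop' step: cut the input into independent chunks."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def encode_chunk(chunk: bytes) -> bytes:
    """Stand-in for a real encoder; zlib is used only so the sketch runs."""
    return zlib.compress(chunk)


def parallel_encode(data: bytes, chunk_size: int = 64 * 1024,
                    workers: int = 4) -> List[bytes]:
    chunks = split(data, chunk_size)
    # Threads keep the sketch simple; a CPU-bound encoder written in pure
    # Python would want a process pool instead.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so the encoded pieces come
        # back in the original sequence even if workers finish out of order.
        return list(pool.map(encode_chunk, chunks))


def decode_all(encoded: List[bytes]) -> bytes:
    """Decode each chunk and join them in order to check the round trip."""
    return b"".join(zlib.decompress(part) for part in encoded)


if __name__ == "__main__":
    source = bytes(range(256)) * 4096  # 1 MiB of dummy "video"
    encoded = parallel_encode(source)
    assert decode_all(encoded) == source
    print(len(encoded), "chunks encoded in parallel")
```

Keeping results in input order is the important design point: the reassembly step only works if the pieces come back in the sequence they were sent out.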

Software encoders have allowed users to be much more flexible in responding to the needs of specific customers or events, and because they all run on the same general-purpose processors and capture boards, they can support more video formats and standards. This has long been an advantage for software encoders. They are easy to reconfigure and use.

The software encoding industry has recently seen battles between open source and closed source. Several pioneering closed source companies helped drive the development of software encoding and streaming: Microsoft; RealNetworks, Inc.; Sorenson Communications; and Adobe Systems, Inc. laid out the framework for modern streaming and web-based video. They have been around since the beginning, and in many cases they financed the codecs that became standards.

In addition to these pioneering companies, there has been a recent movement toward open source. Earlier projects such as x264 and the open source library from the University of California-Berkeley provide the foundation for most software encoders. Code is added to them periodically, and others can use it to build their own custom apps. Better-known projects such as VideoLAN (VLC), FFmpeg, and WebM keep releasing new versions and are catching on in general use. Some are even getting funding from larger public companies. The most notable example is WebM, which is funded mostly by Google; Google made the VP8 codec open source after it acquired On2 Technologies. All this competition and activity is creating better products for consumers. The big companies realize that open source development and innovation move faster than their own, allowing the market to grow more quickly than it otherwise might.

But software-based encoding has drawbacks as well as strengths. The most important parameters of encoding to weigh are quality, flexibility, price, latency, and support.

Software encoding's greatest advantages over pure hardware encoders are flexibility and quality. Software has always been able to adapt and update incredibly fast. When new codec optimizations come out, encoding package updates follow very soon after.

Software encoding can enable the producer, engineer, or other user to get precisely the quality and image they want, unlike an automated hardware solution, where the user has little say in the overall image and outcome. Some larger encoding firms hire color consultants and compressionists, along with programmers and delivery experts, all of whom help the executive producers and directors determine what the final output should look like. It's a broadcast approach to streaming.

Later this year or in early 2013, Intel will release its Xeon Phi series of massively parallel coprocessors, which will work with existing Xeon processors and workflows.

So if software encoding wins in flexibility and quality, what about speed, or latency? While some highly tuned hardware encoders offer latency down in the 30ms range, most software solutions run in the 300ms-500ms range, if not higher. Most people who use software encoding realize they are sacrificing some speed for quality. What matters is whether they can get the resolution and frame rate they want; if the output is delayed somewhat, the workflow can be designed to accommodate it. On the other hand, if you require the lowest latency and fastest delivery, you will have to give up some quality.
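Converting those figures into frames makes the gap easier to picture. The sketch below assumes 30 frames per second; at other frame rates the numbers scale accordingly.

```python
def latency_in_frames(latency_ms: float, fps: float = 30.0) -> float:
    """Convert a delay in milliseconds to the number of frame intervals
    it spans at the given frame rate (one frame lasts 1000/fps ms)."""
    return latency_ms * fps / 1000.0


if __name__ == "__main__":
    for label, ms in (("tuned hardware", 30), ("software, low end", 300),
                      ("software, high end", 500)):
        print(f"{label:>18}: {latency_in_frames(ms):.1f} frames")
```

At 30fps, the tuned hardware figure is under one frame of delay, while the 300ms-500ms software range works out to roughly 9 to 15 frames.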

Cost of support is, of course, an important issue. Will the proprietary vendor keep making the version you're using, or is there a chance it will be withdrawn from the market? How much will updates and upgrades cost? It turns out that upgrades in the open source community are relatively frequent, whereas upgrades to proprietary software are less so.

While some people assume that open source products offer lower quality or reliability than proprietary software, that's not necessarily the case. FFmpeg, VLC, and WebM are all significantly improving their quality. On the other hand, proprietary software packages such as Sorenson and MainConcept have also stood the test of time and continue to find widespread use. Interestingly, MainConcept and Sorenson are two of the few companies whose solutions are used in both software and hardware encoding; both provide codecs for the PC environment as well as for specifically designed chips.

Changes in Media Consumption, Changes in Media Encoding

Dedicated hardware-based decoders are now playing an important role in the overall media viewing world, especially as more and more viewers quit cable and go the IP route for all of their video consumption. Roku, Boxee, and other IP set-top boxes are DSP-based decoders. At the same time, more and more consumers are adopting Android or iOS devices as personal media players, and each device brings its own set of ideal encoding profiles and parameters. You'll find you need to do custom scaling, and you will probably want to encode at the highest possible complexity. The catch is that higher complexity means spending more CPU cycles per frame, which requires more encoding time but creates a better outcome.
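The trade-off in that last point is simple arithmetic: encoding time grows with the cycles spent per frame. The sketch below uses made-up example numbers for cycle counts, clock speed, and core count, and it assumes perfect scaling across cores, which real encoders do not achieve.

```python
def encode_time_seconds(frames: int, cycles_per_frame: float,
                        clock_hz: float, cores: int) -> float:
    """Idealized encode time: total cycles needed divided by total cycles
    per second available, assuming work splits evenly across all cores."""
    return frames * cycles_per_frame / (clock_hz * cores)


if __name__ == "__main__":
    frames = 30 * 60 * 10  # a 10-minute clip at 30fps = 18,000 frames
    # Example values only: a 3GHz, 8-core machine and two hypothetical presets.
    fast = encode_time_seconds(frames, 2e8, 3e9, 8)  # lighter preset
    slow = encode_time_seconds(frames, 8e8, 3e9, 8)  # 4x the cycles per frame
    print(f"lighter preset: {fast:.0f}s, heavier preset: {slow:.0f}s")
```

Under these assumptions, quadrupling the work per frame quadruples the encoding time, from 150 seconds to 600 seconds for the same clip.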

Conclusion

There will always be a battle between hardware encoding and software encoding. Who will win in various market segments? Why choose a hardware encoder over a software encoder? Even now we are starting to see more specialty functionality added to general-purpose CPUs, thanks to the miniaturization and density of transistors and processors. For instance, Intel recently agreed to buy 190 patents and 170 patent applications from RealNetworks, and for years Intel has been adding graphics processing and other accelerators or processing engines to its chips.

Dedicated hardware encoding wins in unique parallel processing situations where massive amounts of data need to be processed, as well as in low-latency communications such as real-time financial and some military applications. It also leads where you want to just install the encoding tool and let it do its thing, as with YouTube, which can rely on automated, predefined resolutions and bitrates for a massive number of viewers. But software encoders will be the tool of choice in most applications. It's faster and cheaper to encode with software than with hardware, and once you see how the market responds to your output, it's faster and cheaper to make modifications.

So, do you most value flexibility and lower costs? Then software is probably your best bet. Do you need low latency, stream density, or automated transcoding for the mobile market? Then a hardware solution is probably best for you.
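The rule of thumb above can be written down as a tiny decision function. The requirement flags are hypothetical simplifications of the article's criteria, not a complete selection method.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    needs_low_latency: bool = False             # e.g. telepresence, military
    needs_high_density: bool = False            # many streams per box
    automated_mobile_transcoding: bool = False  # predefined outputs at scale


def recommend(req: Requirements) -> str:
    """Apply the article's rule of thumb. Any hardware-only need wins,
    since per the text low latency currently requires DSPs, ASICs, or
    FPGAs; otherwise default to software, the tool of choice in most
    applications because it is cheaper and more flexible."""
    if (req.needs_low_latency or req.needs_high_density
            or req.automated_mobile_transcoding):
        return "hardware"
    return "software"


if __name__ == "__main__":
    print(recommend(Requirements()))                        # flexibility, cost
    print(recommend(Requirements(needs_low_latency=True)))  # low latency
```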

This article appears in the October/November, 2012, issue of Streaming Media magazine under the title "The Specialist Vs. the Jack-of-All-Trades."
