Closed Captioning for Streaming Media

Large web-only broadcasters should also be concerned about a recent ruling in the National Association of the Deaf’s (NAD) lawsuit against Netflix, in which NAD asserted that the Americans with Disabilities Act imposed an obligation for Netflix to caption its “watch instantly” site. In rejecting Netflix’s motion to dismiss, the court ruled that the internet is a “place of public accommodation” and therefore is subject to the act. Netflix later settled the lawsuit, agreeing to caption all of its content over a 3-year schedule and paying around $800,000 for NAD’s legal fees and other costs. In the blog post referenced previously, the attorney stated: “[P]roviding captioning earlier, rather than later, should be a goal of any video programming owner since it will likely be a delivery requirement of most distributors and, in any event, may serve to avoid potential ADA claims.” This sounds like good advice for any significant publisher of web-based video.

Voluntary Captioners

Beyond those who must caption, there is a growing group of organizations that caption for the benefit of their hearing-impaired viewers, to help monetize their video, or both. One company heavily invested in caption-related monetization is Boston-based RAMP. I spoke briefly with Nate Treloar, the company’s VP of strategic partnerships.

In a nutshell, Treloar related that captions provide metadata that can feed publishing processes, such as topic pages and microsites, that enhance search engine optimization (SEO) and are impossible without captions. RAMP was originally created to produce such metadata for its clients, and it only branched into captioning when it became clear that many of its TV clients, which include CNBC, FOX Business, Comcast SportsNet, and the Golf Channel, would soon have to have captioning on the web.

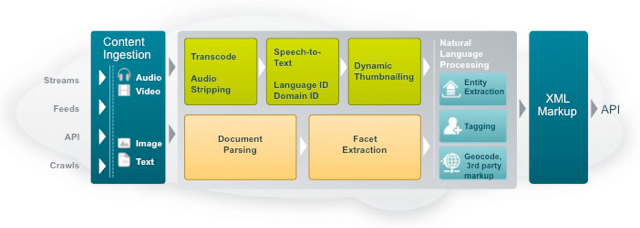

Figure 3. RAMP’s cloud-based captioning and metadata creation workflow

As shown in Figure 3, RAMP’s technology can input closed captions from text or convert them from audio, with patented algorithms that prioritize accuracy for the nouns that drive most text searches. This content is processed and converted into timecoded transcripts and tags for use in SEO with dynamic thumbnailing for applications like video search. The transcripts can then be edited for use in web captioning.

RAMP’s prices depend upon volume commitment and the precise services performed, but Treloar stated that video processing ranges from pennies to several dollars per minute. With customers such as FOX News reporting a 129% growth in SEO traffic, the investment seems worth it for sites where video makes up a substantial share of their overall content.

Now that we know who’s captioning for the web, let’s explore how it’s done.

Creating Closed Captions for Streaming Video

There are two elements to creating captions: The first involves creating the text itself; the second involves matching the text to the video. Before we get into the specifics of both, let’s review how captions work for broadcast TV.

In the U.S., TV captioning became required under the Television Decoder Circuitry Act of 1990, which prompted the Electronic Industries Association (EIA) to create EIA-608, which is now called CEA-608 for the Consumer Electronics Association that succeeded the EIA. Briefly, CEA-608 captions are limited to 60 characters per second, with one Latin-based character set that can only be used for a limited set of languages. These tracks are embedded into the line 21 data area of the analog broadcast, which sits within the vertical blanking interval, so they are retrieved and decoded along with the audio/video content.

Where CEA-608 is for analog broadcasts, CEA-708 (formerly EIA-708) is for digital broadcasts. The CEA-708 specification is much more flexible and can support Unicode characters with multiple fonts, sizes, colors, and styles. CEA-708 captions are embedded as a text track into the transport stream carrying the video, which is typically MPEG-2 or MPEG-4.

A couple of key points here. First, if you’re converting analog or digital TV broadcasts, it’s likely that the text track is already contained therein, so the caption creation task is done. Most enterprise encoding tools can pass through that text track when transcoding video for distribution to OTT or other devices that can recognize and play embedded text tracks.

Unfortunately, though QuickTime and iOS players can recognize and play these embedded text tracks, other formats, such as Flash, Smooth Streaming, and HTML5, can’t. So to create captioning for these other formats, you’ll need to extract the caption data from the broadcast feed and convert it into one or more of the caption file formats that are discussed in more detail later. Not all enterprise encoders can do this today, though it’s a critical feature that most products should support in the near future.
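To make the conversion step concrete, here is a minimal sketch of writing already-extracted captions out as a sidecar caption file. It assumes the caption text and timings have been pulled from the broadcast feed by some other tool, and it uses WebVTT, the text track format read by the HTML5 track element, purely as an example target. The `Cue` class and both function names are hypothetical, invented for illustration rather than taken from any encoder or library.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    """One caption: when it appears, when it disappears, and what it says."""
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str


def format_timestamp(seconds: float) -> str:
    """Render seconds as a WebVTT timestamp in hh:mm:ss.mmm form."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(cues: list[Cue]) -> str:
    """Serialize cues as a WebVTT document (header, then one block per cue)."""
    blocks = ["WEBVTT"]
    for cue in cues:
        timing = f"{format_timestamp(cue.start)} --> {format_timestamp(cue.end)}"
        blocks.append(f"{timing}\n{cue.text}")
    # Blocks are separated by blank lines, as the format requires.
    return "\n\n".join(blocks) + "\n"


if __name__ == "__main__":
    sample = [
        Cue(1.0, 4.0, "Good evening and welcome."),
        Cue(4.5, 7.25, "Tonight's top story..."),
    ]
    print(to_webvtt(sample))
```

A production converter would also need to carry over positioning and styling and to escape characters that the target format reserves; this sketch handles plain text only.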

Captioning Your Live Event

If you’re not starting with broadcast input, you’ll have to create the captions yourself. For live events, Step 1 is to find a professional captioning company such as CaptionMax, which has provided captioning services for live and on-demand presentations since 1993. I spoke with COO Gerald Freda, who described this live event workflow.

With CaptionMax, you contract for a live stenographer (aka real-time captioner) who is typically off-site and who receives an audio feed via telephone or streaming connection. The steno machine has 22 keys representing phonetic parts of words and phrases, rather than the 60-plus keys on a typical computer keyboard. The input feeds through software, which converts it to text. This text is typically sent to an IP address in real time, where it’s formatted as necessary for the broadcast and transmitted in real time. The text is linked programmatically with the video player and presented either in a sidecar window or, preferably, atop the streaming video just like TV.

Unlike captions for on-demand videos, there’s no attempt to synchronize the text with the spoken word—the text is presented as soon as available. If you’ve ever watched captioning of a live broadcast, you’ll notice that this is how it’s done on television, and there’s usually a lag of 2–4 seconds between the spoken word and the caption.
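The "present it as soon as it arrives" behavior can be sketched as a small rolling buffer on the player side. This is an illustration only, not CaptionMax's or any player's actual implementation: the `RollUpCaptions` class is hypothetical, and the defaults of three lines of 32 characters are assumptions loosely modeled on the roll-up style of TV captions.

```python
from collections import deque


class RollUpCaptions:
    """Keep the most recent caption lines, adding text as soon as it arrives."""

    def __init__(self, max_lines: int = 3, max_chars: int = 32):
        # Both limits are illustrative assumptions, not values from the article.
        self.max_chars = max_chars
        self.lines = deque([""], maxlen=max_lines)

    def feed(self, text: str) -> list[str]:
        """Append newly received text and return the lines to display now."""
        for word in text.split():
            current = self.lines[-1]
            candidate = f"{current} {word}".strip()
            if not current or len(candidate) <= self.max_chars:
                # The word fits on the current line (or the line is empty).
                self.lines[-1] = candidate
            else:
                # Start a new line; the deque drops the oldest line when full.
                self.lines.append(word)
        return list(self.lines)


if __name__ == "__main__":
    display = RollUpCaptions(max_lines=2, max_chars=20)
    # Text arrives in fragments, with no timing information attached.
    for fragment in ["Good evening and", "welcome to the", "live broadcast."]:
        print(display.feed(fragment))
```

Note that nothing here carries a timestamp: each fragment is shown the moment it is received, which is why the lag between speech and caption depends entirely on the stenographer and the network path.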

According to Phil McLaughlin, president of EEG Enterprises, Inc., seemingly small variations in how streaming text is presented could impact whether the captioning meets the requirements of the various statutes that dictate its use. By way of background, EEG was one of the original manufacturers of “encoders” that multiplex analog and digital broadcasts with the closed caption text; it currently manufactures a range of web and broadcast-related caption products. The company also has a web-based service for captioning live events.

Here are some of the potential issues McLaughlin was referring to. Language in the hearings related to the FCC regulations that mandated captioning for TV broadcasters discussed providing a “captioning experience … equivalent to … [the] television captioning experience.” McLaughlin doubts that presenting the captions in a sidecar meets this equivalency requirement because the viewer has to shift between the sidecar and video, which is much harder than watching the text over the video. At NAB 2012, McLaughlin’s company demonstrated how to deliver live captions over the Flash Player using industry-standard components, so sidecars may be history by the time broadcasters have to caption live events in March 2013.
