'Performance Everywhere' Optimization of Your Streaming Solution for Ultimate QoE

What can seriously dent the reputation of a previously unbeaten streaming service?

Each new feature of a video streaming solution has made viewing sessions more comfortable and the app more enjoyable to interact with.

But why is the churn rate higher than average? Despite an array of personalization features and a UI experience most viewers could only dream of, a streaming service provider was gradually losing its subscribers. What was the root of the problem?

…A family gathers to watch their daily series. Everyone is eager to know what happens in the next episode, but right in the middle of a tense scene the video player freezes and eventually crashes.

At the same time, a group of friends cannot even start the movie they want. Although it appears instantly at the top of the extensive library, an unexpected error occurs after they click on the preview.

What unites these two groups, apart from a ruined evening? Both fell victim to performance issues with their favorite streaming service.

The trickiest part of app performance is that you have little margin for error: even a single fault, if not fixed in time, is likely to drive subscribers away from your streaming service. The task becomes more challenging when you try to reach users across multiple devices. The more devices you cover to grow your audience, the more hidden performance hazards you may run into, both general and platform-specific.

To find and neutralize performance issues before they cost you end users, you should first carefully run checks on major performance vulnerabilities and bottlenecks.

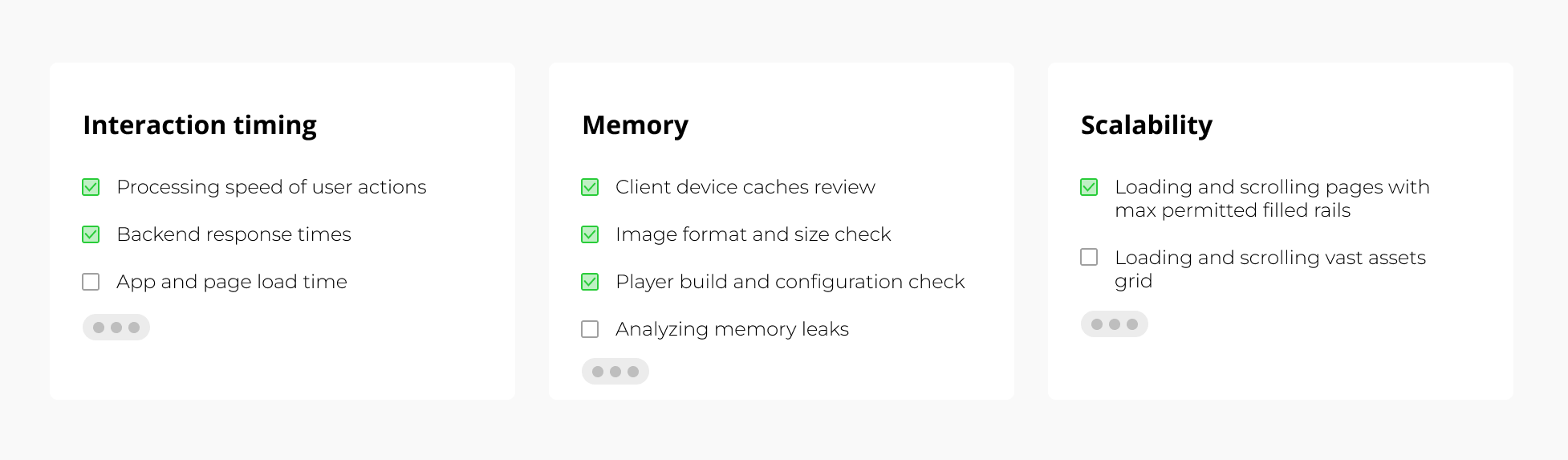

Let’s go over a step-by-step checklist of crucial performance tasks, with some explanations to make each one clearer.

Areas for performance benchmarking to explore

Right from the get-go, a performance engineering team either gets a list of response time KPIs from the customer or derives the expected figures from its own expertise. This is where the client and the team sometimes discuss the choice of metrics, so that all checks rely on accurate parameters.

Step 1. Measuring interaction timing

The next stage is tracking the end-to-end timing of user actions, from backend response times (Time to First Byte, or TTFB) to the loading time of grids with assets, to identify and prioritize performance issues, if any.
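As a minimal sketch of such end-to-end tracking, the Python snippet below records named milestones for a single user action. The class name `InteractionTimer`, the milestone names, and the simulated delays are assumptions made for illustration, not part of any particular player or SDK.

```python
import time


class InteractionTimer:
    """Records named milestones of one user action, in ms since the action began."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock            # injectable so the timer can be driven by a fake clock
        self._started_at = clock()
        self.marks_ms = {}

    def mark(self, milestone):
        """Store and return the elapsed time for a milestone."""
        elapsed_ms = (self._clock() - self._started_at) * 1000.0
        self.marks_ms[milestone] = elapsed_ms
        return elapsed_ms


def open_catalog_page(timer):
    # Stand-ins for real work; the sleeps are illustrative only.
    time.sleep(0.02)                   # waiting for the backend
    timer.mark("first_byte")           # hypothetical TTFB milestone
    time.sleep(0.03)                   # downloading and rendering the grid
    timer.mark("grid_rendered")        # hypothetical end of the user-visible action


timer = InteractionTimer()
open_catalog_page(timer)
print(timer.marks_ms)
```

Keeping both milestones for the same action makes it easier to tell whether a slow interaction is dominated by the backend response or by client-side loading.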

Every user action needs to be measured on each client device to be covered, including web, mobile, Smart TVs, and set-top boxes. Following established best practice, we report the 75th percentile value. For instance, after making four measurements, we take the value that at least three of the four results do not exceed.

75th percentile measurements

In some cases, such as a Start Playback action, additional drill-down analysis might be required to get a true picture, so we may take eight or more measurements.

Just to recap: taking the maximum or average value as a starting point for performance optimization is incorrect, because a single outlier can distort both. What should be the endpoint of the measurement, and what are the expected timing KPIs? As a rule, we share our view with the customer so that we can agree on the suggested criteria.
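To make the 75th percentile rule concrete, here is a small sketch using the nearest-rank method, which matches the three-out-of-four example above. The function name and the timing values are invented for illustration.

```python
from statistics import mean


def percentile_nearest_rank(samples, pct=75):
    """Return the smallest sample that at least pct% of the samples do not exceed."""
    if not samples:
        raise ValueError("at least one measurement is required")
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100 gives the 1-based nearest rank.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


# Hypothetical Start Playback timings in milliseconds; one run hit a slow path.
start_playback_ms = [1200, 1350, 1280, 4100]

p75 = percentile_nearest_rank(start_playback_ms)
print(p75, mean(start_playback_ms), max(start_playback_ms))  # 1350 1982.5 4100
```

With these numbers the single slow run pulls the average to almost two seconds and the maximum to over four, while the 75th percentile stays close to what most runs actually delivered.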

Step 2. Checking memory consumption

Memory is the most expensive resource and must be used sparingly, especially on platforms with rigid limits such as Smart TVs or Android set-top boxes. If memory is used too intensively, stream freezes will not be long in coming.

Memory check results

Memory optimization calls for advanced techniques, and the savings are counted in megabytes. Because the hardware of a particular TV or set-top box model cannot be upgraded, saving even a few megabytes of memory is a real achievement.

“Caching helps to improve app performance” is one of the most widespread performance best practices. Client devices are an exception to the rule, though. Adding caching to television or set-top box clients might have the opposite effect, putting pressure on memory and causing app latency issues.

In this case, it helps to fetch data from the backend and store only the relevant subset: the app fetches only as many objects as it needs, which avoids excessive memory pressure from unused or rarely used data.
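One way to picture this is a grid that holds only the pages of assets around the current scroll position and drops the rest. The sketch below is an illustration under assumed names (`WindowedGrid`, `fake_backend`) and assumed page sizes, not a description of a specific client implementation.

```python
class WindowedGrid:
    """Keeps only the pages of assets nearest to the one currently in view."""

    def __init__(self, fetch_page, page_size=20, max_pages=3):
        if max_pages < 1:
            raise ValueError("max_pages must be at least 1")
        self._fetch_page = fetch_page    # hypothetical backend call: (page, size) -> list
        self._page_size = page_size
        self._max_pages = max_pages
        self._pages = {}                 # page index -> list of assets

    def item(self, index):
        page = index // self._page_size
        if page not in self._pages:
            self._pages[page] = self._fetch_page(page, self._page_size)
            self._evict_far_from(page)
        return self._pages[page][index % self._page_size]

    def _evict_far_from(self, current_page):
        # Drop the pages farthest from the one in view until the budget is met.
        while len(self._pages) > self._max_pages:
            farthest = max(self._pages, key=lambda p: abs(p - current_page))
            del self._pages[farthest]

    def items_in_memory(self):
        return sum(len(assets) for assets in self._pages.values())


def fake_backend(page, page_size):
    # Stand-in for a real catalog request.
    start = page * page_size
    return [{"id": i, "title": f"Asset {i}"} for i in range(start, start + page_size)]


grid = WindowedGrid(fake_backend, page_size=20, max_pages=3)
for i in range(200):                     # simulate scrolling through 200 assets
    grid.item(i)
print(grid.items_in_memory())            # 60 (page_size * max_pages)
```

However far the user scrolls, the number of objects held stays bounded, at the cost of refetching a page when the user returns to it.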

Step 3. Detecting memory leaks for various use cases

On top of analyzing memory capacity and formulating ways to optimize its use across multiple devices, performance teams watch for memory leaks caused by incorrect management of memory allocations, and investigate why they occur.

Scenarios to examine closely, to make sure the target device has enough memory for them, include active EPG interaction, frequent switching between app pages or scrolling through grids, and continuous app usage for 12 to 24 hours if possible.

Finding a memory leak is a troublesome business that sometimes takes more than 12 hours. Overnight tests are a good option for this challenging search: the TV or set-top box runs uninterrupted while potential slowdowns are tracked.
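One simple way to read the results of such an overnight run is to fit a straight line to the memory samples and look at its slope. The sketch below does this with a plain least-squares fit; the sample data and the 1 MB per hour threshold are assumptions chosen for illustration, and a real threshold would depend on the device.

```python
def growth_rate_mb_per_hour(samples):
    """Least-squares slope of (hours_elapsed, memory_mb) samples."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    cov = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    if var == 0:
        raise ValueError("samples must span more than one point in time")
    return cov / var


def looks_like_leak(samples, threshold_mb_per_hour=1.0):
    # Assumed rule of thumb: sustained growth above the threshold is suspicious.
    return growth_rate_mb_per_hour(samples) > threshold_mb_per_hour


# Hypothetical hourly readings from two 12-hour runs.
steady = [(h, 310 + (h % 3)) for h in range(13)]            # small fluctuation only
leaking = [(h, 310 + 4 * h + (h % 3)) for h in range(13)]   # about 4 MB gained per hour

print(looks_like_leak(steady), looks_like_leak(leaking))    # False True
```

A trend over many hours is more telling than any single reading, since normal usage makes memory fluctuate from one sample to the next.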

Step 4. Examining app scalability potential content-wise

Huge data volumes create a number of scalability problems, which might lead to limited app responsiveness or even crashes. This calls for separate tests of content collections: handling 100 elements with ease doesn’t mean that 1,000 elements in a category won’t affect the app’s performance.

But where do you find enough test data to perform volume testing and make sure the app handles it without crashing? Obtaining large data volumes becomes the main challenge during scalability checks.

For accurate performance testing, we can fill this gap either by using backend production data (if the system has been live for a while) or by generating synthetic test data when the release is yet to come.
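For the synthetic route, a generator along the following lines can produce collections of any size. The field names, genres, and placeholder URL are hypothetical; a real data set would mirror the schema of the actual backend.

```python
import random


def make_synthetic_catalog(asset_count, genres=("Drama", "Comedy", "Sports", "Kids"), seed=42):
    """Build a repeatable list of fake assets for volume testing."""
    rng = random.Random(seed)            # fixed seed so every run sees the same data
    return [
        {
            "id": f"asset-{i:06d}",
            "title": f"Synthetic Title {i}",
            "genre": rng.choice(genres),
            "duration_s": rng.randint(20 * 60, 3 * 60 * 60),
            "poster_url": f"https://example.invalid/posters/{i}.jpg",  # placeholder only
        }
        for i in range(asset_count)
    ]


# Grow the collection step by step to see where the app starts to struggle.
for size in (100, 1_000, 10_000):
    catalog = make_synthetic_catalog(size)
    print(size, len(catalog), catalog[-1]["id"])
```

A fixed seed keeps runs comparable, so a change in timing or memory between two test runs can be attributed to the app rather than to different data.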

Scalability checks

Does the ultimate viewing experience of a streaming service lie solely at the app level?

If the frontend were isolated from the other components of a video streaming service, frontend enhancements would go faster and more smoothly. But in the real world, all dependencies and limitations may affect a frontend app’s performance.

Forewarned is forearmed: below is a list of hidden pitfalls, along with some frontend-side tips for avoiding them.

Not by frontend alone

Third-party libraries, external plugins, video players, and even the platform powering a video streaming service can be the root of performance issues. Questions arise when tech specialists do not have access to this code. How do you overcome performance challenges in this case?

If your team can’t reach the root-cause areas, taking a holistic view of the issue is the best approach. Communication across the teams responsible for every link in the chain, including backend, apps, integration layers, and players, is vital.

When cross-team communication is complicated, what remains is to do your best to streamline the performance of the part you fully control.

CDN response time

Device heterogeneity requires a multi-step performance optimization approach

During performance scope planning, one should address the specific requirements of each platform that forms part of a video streaming offering. Tizen, webOS, tvOS, Android and Android TV, iOS, Roku TV: each comes in multiple versions with different memory and hardware characteristics. Timing measurements must be calculated for each platform independently, as optimization techniques are most likely to differ.

When you’re dealing with a large set of devices, as many media and entertainment clients do, and plan to launch the app on about a dozen platforms including TVs, web, mobile, and set-top boxes, be ready to shift dates in your release calendar. To minimize delays, we recommend untangling the work step by step. First, focus on optimizing the platform-independent layer. Platform-specific optimization activities come next.

OS and hardware make it hard to move ahead: do profound research for target devices

As mentioned above, the more platforms enter a streaming service ecosystem, the more constraints they impose on performance engineers. Therefore, thorough functional testing against the client’s business requirements is a must to validate that the app launches smoothly and remains usable across all the requested devices. It will help reveal how far each device can be relied on to deliver excellent service.

A good practice before optimizing the frontend is to check each device version against the planned app functionality and the expectation of smooth operation, then compile a list of performance recommendations.

Please note that for performance testing, hardware emulators don’t guarantee accurate results, which undermines their value. If there’s a need to test, say, a couple of webOS generations, a physical device is required for each version, as most performance issues turn out to be device-specific.

Alexander Skamarokha is the Head of the Performance Optimization Team at Oxagile.
