What Is a Codec?

This is an installment in our ongoing series of "What Is...?" articles, designed to offer definitions, history, and context around significant terms and issues in the online video industry.

Executive Summary

Codecs are the oxygen of the streaming media market; no codecs, no streaming media. From shooting video to editing to encoding our streaming media files for delivery, codecs are involved every step of the way. Many video producers also work in the DVD, Blu-ray, and broadcast markets, and codecs play a role there, too.

Though you probably know what a codec is, do you really know codecs? Certainly not as well as you will after reading this article. First we’ll cover the basics regarding how codecs work, then we’ll examine the different roles performed by various codecs. Next we’ll examine how H.264 became the most widely used video codec today, and finish with a quick discussion of audio codecs.

Codec Basics

Codecs are compression technologies with two components: an encoder that compresses the file and a decoder that decompresses it. There are codecs for data (PKZIP), still images (JPEG, GIF, PNG), audio (MP3, AAC) and video (Cinepak, MPEG-2, H.264, VP8).

There are two kinds of codecs: lossless and lossy. Lossless codecs, like PKZIP or PNG, reproduce the original file exactly upon decompression. There are some lossless video codecs, including the Apple Animation codec and the Lagarith codec, but they can’t compress video to data rates low enough for streaming.

In contrast, lossy codecs produce a facsimile of the original file upon decompression, not the original file itself. Lossy codecs have one immutable trade-off: the lower the data rate, the less the decompressed file looks (or sounds) like the original. In other words, the more you compress, the more quality you lose.
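To make the lossless/lossy distinction concrete, here is a toy sketch. The lossless half uses Python’s standard zlib module (DEFLATE, the algorithm used in ZIP archives) and checks that the round trip is bit-for-bit identical. The lossy half is a simple uniform quantizer made up for illustration, not any real codec’s algorithm: a larger step size means fewer bits per sample and a larger reconstruction error, which is the trade-off described above.

```python
import zlib


def lossless_roundtrip(data: bytes) -> bytes:
    """Compress with DEFLATE (the algorithm used in ZIP files) and decompress."""
    return zlib.decompress(zlib.compress(data))


def quantize(samples, step):
    """Toy lossy encoder: keep only which step-sized bucket each 8-bit sample falls in."""
    return [s // step for s in samples]


def dequantize(codes, step):
    """Toy lossy decoder: rebuild each sample as the midpoint of its bucket."""
    return [c * step + step // 2 for c in codes]


def bits_per_sample(step):
    """Bits needed to store one bucket index for samples in the range 0..255."""
    return (255 // step).bit_length()


def max_error(original, rebuilt):
    """Largest absolute difference between original and reconstructed samples."""
    return max(abs(a - b) for a, b in zip(original, rebuilt))


if __name__ == "__main__":
    data = bytes(range(256)) * 4
    assert lossless_roundtrip(data) == data  # lossless: bit-for-bit identical

    samples = [0, 13, 27, 41, 58, 77, 99, 120, 143, 167, 190, 212, 233, 255]
    for step in (1, 4, 16, 64):
        rebuilt = dequantize(quantize(samples, step), step)
        print(f"{bits_per_sample(step)} bits/sample -> max error {max_error(samples, rebuilt)}")
```

For these samples, the output steps from 8 bits per sample with no error down to 2 bits per sample with a maximum error of 32: fewer bits, more distortion.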

Lossy compression technologies use two types of compression: intra-frame and inter-frame. Intra-frame compression is essentially still-image compression applied to video, with each frame compressed without reference to any other. For example, Motion-JPEG uses only intra-frame compression, encoding each frame as a separate JPEG image. The DV codec also uses solely intra-frame compression, as does DVCPRO HD, which essentially divides each HD frame into four SD DV blocks, all encoded solely via intra-frame compression.

In contrast, inter-frame compression uses redundancies between frames to compress video. For example, in a talking head scenario, much of the background remains static. Inter-frame techniques store the static background information once, then store only the changed information in subsequent frames. Inter-frame compression is much more efficient than intra-frame compression, so most codecs are optimized to search for and leverage redundant information between frames.
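Here is a minimal sketch of that idea, assuming frames are 2-D lists of 8-bit values split into 8x8 blocks (both assumptions for illustration). It only detects blocks that changed; real inter-frame codecs also use motion estimation to find where a block moved, which this sketch does not attempt.

```python
def split_blocks(frame, size):
    """Yield ((row, col), block) for each size-by-size block of a 2-D frame."""
    height, width = len(frame), len(frame[0])
    for r in range(0, height, size):
        for c in range(0, width, size):
            block = tuple(tuple(frame[y][c:c + size]) for y in range(r, min(r + size, height)))
            yield (r, c), block


def changed_blocks(previous, current, size=8):
    """Return the positions of blocks whose pixels differ between two frames."""
    before = dict(split_blocks(previous, size))
    return [pos for pos, block in split_blocks(current, size) if before[pos] != block]


if __name__ == "__main__":
    # A 32x32 "talking head" frame: a flat gray background.
    frame1 = [[128] * 32 for _ in range(32)]
    # The next frame is identical except for a small "mouth" region.
    frame2 = [row[:] for row in frame1]
    for y in range(20, 24):
        for x in range(12, 20):
            frame2[y][x] = 40
    moved = changed_blocks(frame1, frame2)
    print(moved)  # [(16, 8), (16, 16)]
    print(f"store {len(moved)} of 16 blocks")
```

Only 2 of the 16 blocks need to be stored for the second frame; the other 14 can be copied from the first.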

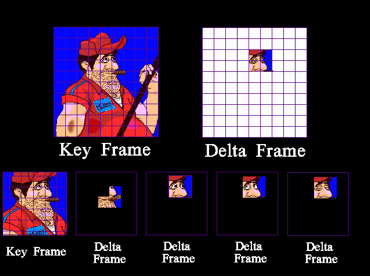

Early CD-ROM-based codecs like Cinepak and Indeo used two types of frames for this operation: key frames and delta frames. Key frames stored the complete frame and were compressed only with intra-frame compression. During encoding, the pixels in delta frames were compared to pixels in previous frames, and redundant information was removed. The remaining data in each delta frame was then compressed using intra-frame techniques as necessary to meet the target data rate of the file.

Figure 1. Key frames and delta frames as deployed by CD-ROM based codecs.

Figure 1 illustrates this with a talking head video of the painter shown in the upper left. During the video, the only regions in the frame that change are the mouth, cigar, and eyes. The four delta frames store only the blocks of pixels that have changed and refer back to the key frame during decompression for the redundant information.

With an animated file like this one, inter-frame compression would be lossless, because you could recreate the original animation bit for bit from the information stored in the key and delta frames. Inter-frame compression isn’t lossless with real-world video, but it is very efficient when little changes from frame to frame, which explains why talking head videos encode at much higher quality than soccer matches or NASCAR races.
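The key/delta scheme in Figure 1 can be sketched as follows, under simplifying assumptions: deltas are recorded per pixel rather than per block, key frames are forced at a fixed interval (the hypothetical `key_interval` parameter), and the intra-frame compression of the remaining data is omitted. On a synthetic animation, the decoder reproduces every frame bit for bit, which is the lossless case described above.

```python
from copy import deepcopy


def encode(frames, key_interval=4):
    """Store every key_interval-th frame whole (a key frame); for the others,
    store only the pixels that changed since the previous frame (a delta frame)."""
    stream, previous = [], None
    for i, frame in enumerate(frames):
        if previous is None or i % key_interval == 0:
            stream.append(("key", deepcopy(frame)))
        else:
            changes = [(y, x, value)
                       for y, row in enumerate(frame)
                       for x, value in enumerate(row)
                       if previous[y][x] != value]
            stream.append(("delta", changes))
        previous = frame
    return stream


def decode(stream):
    """Rebuild frames: copy key frames whole, apply delta frames to the prior frame."""
    frames, current = [], None
    for kind, payload in stream:
        if kind == "key":
            current = deepcopy(payload)
        else:
            current = deepcopy(current)
            for y, x, value in payload:
                current[y][x] = value
        frames.append(current)
    return frames


def make_animation(count=6, size=8):
    """A synthetic animation: one bright pixel moving across a black frame."""
    frames = []
    for t in range(count):
        frame = [[0] * size for _ in range(size)]
        frame[3][t % size] = 255
        frames.append(frame)
    return frames


if __name__ == "__main__":
    original = make_animation()
    stream = encode(original)
    assert decode(stream) == original  # bit-for-bit reconstruction
    print([(kind, len(payload)) for kind, payload in stream])
```

Each delta frame here holds just two changed pixels (where the dot was and where it is now), versus 64 pixels for a full frame.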

Long GOP Formats

Since the CD-ROM days, inter-frame techniques have advanced, and most codecs, including MPEG-2, H.264 and VC-1, now use three frame types for compression: I-frames, B-frames, and P-frames, as shown in Figure 2. I-frames are the same as key frames and are compressed solely with intra-frame techniques, making them the largest and least efficient frame type.

Figure 2. I-, B- and P-frames as used in most advanced compression technologies.

B-frames and P-frames are both delta frames. P-frames are the simplest, and can utilize redundant information in any previous I- or P-frame. B-frames are more complex, and can utilize redundant information in any previous or subsequent I-, B- or P-frame. This makes B-frames the most efficient of the three frame types.
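Because a B-frame can draw on a subsequent frame, that frame has to be decoded first, so the order in which frames are stored and decoded differs from the order in which they are displayed. The sketch below assumes the classic MPEG-2 arrangement, in which a B-frame references only the nearest preceding and following I- or P-frame; H.264’s ability to use B-frames as references is deliberately ignored.

```python
def decode_order(display):
    """Reorder a display-order frame-type string (e.g. "IBBPBBP") into decode order.

    Assumes B-frames reference only the nearest preceding and following
    I- or P-frame, so each such anchor must be decoded before the B-frames
    that sit in front of it in display order.
    """
    order, pending_b = [], []
    for index, kind in enumerate(display):
        if kind == "B":
            pending_b.append(index)      # wait until the next anchor is decoded
        elif kind in "IP":
            order.append(index)          # decode the anchor first...
            order.extend(pending_b)      # ...then the B-frames that depend on it
            pending_b = []
        else:
            raise ValueError(f"unknown frame type {kind!r}")
    if pending_b:
        raise ValueError("trailing B-frames need a following I- or P-frame")
    return order


if __name__ == "__main__":
    gop = "IBBPBBP"
    order = decode_order(gop)
    print(order)                           # [0, 3, 1, 2, 6, 4, 5]
    print("".join(gop[i] for i in order))  # IPBBPBB
```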

These multiple frame types are stored in a group of pictures, or GOP, that starts with each I-frame and includes all frames up to but not including the subsequent I-frame. Codecs that use all three frame types are often called “long GOP formats,” particularly in the context of non-linear editing systems. This highlights the second fundamental trade-off of lossy compression technologies: quality versus decode complexity. That is, the more quality a codec delivers at a given data rate, the harder it is to decode, particularly in interactive applications like video editing.
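The GOP definition translates directly into code. This sketch assumes frame types are given as a display-order string such as "IBBPBBPBB"; that string representation is just a convenience for illustration.

```python
def split_gops(frame_types):
    """Split a display-order string of frame types into GOPs. Each GOP starts
    at an I-frame and runs up to, but not including, the next I-frame."""
    if not frame_types.startswith("I"):
        raise ValueError("a sequence must start with an I-frame")
    gops = []
    for kind in frame_types:
        if kind == "I":
            gops.append("")  # every I-frame opens a new GOP
        gops[-1] += kind
    return gops


if __name__ == "__main__":
    gops = split_gops("IBBPBBPBBIBBPBBP")
    print(gops)                      # ['IBBPBBPBB', 'IBBPBBP']
    print([len(g) for g in gops])    # [9, 7]
```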

The first long-GOP format used in non-linear editing systems was HDV, an MPEG-2-based format, and it introduced considerable complexity. With DV and Motion-JPEG, each frame was completely self-contained, so you could drag the editing playhead to any frame in the video and it would decompress in real time.

However, with the MPEG-2-based HDV, if you dragged the playhead to a B-frame, the non-linear editor had to decompress all frames referenced by that B-frame, and those frames could be located before or after it in the timeline. On the underpowered computer systems of the day, most running 32-bit operating systems that could address only 2GB of memory, long-GOP formats caused significant latency, which made editing unresponsive.
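To see why scrubbing got expensive, this sketch counts the frames a decoder must decompress before it can show a given frame. It uses a simplified model in which a B-frame references only the nearest I- or P-frame on each side, a P-frame references the previous I- or P-frame, and every decoded frame counts as equal work. It illustrates the dependency structure, not the behavior of any real editor’s decoder.

```python
def frames_to_decode(types, target):
    """Return the display indices a decoder must decode to show frame `target`."""

    def anchor_chain(i):
        # An I-frame stands alone; a P-frame needs every anchor back to its I-frame.
        chain = [i]
        while types[i] != "I":
            i = max(j for j in range(i) if types[j] in "IP")
            chain.append(i)
        return chain

    if types[target] in "IP":
        needed = set(anchor_chain(target))
    else:
        # A B-frame needs the anchors on both sides of it, plus their own references.
        before = max(j for j in range(target) if types[j] in "IP")
        after = min(j for j in range(target + 1, len(types)) if types[j] in "IP")
        needed = set(anchor_chain(before)) | set(anchor_chain(after)) | {target}
    return sorted(needed)


if __name__ == "__main__":
    long_gop = "IBBPBBPBBPBBI"
    intra_only = "I" * len(long_gop)
    print(frames_to_decode(long_gop, 10))    # [0, 3, 6, 9, 10, 12]
    print(frames_to_decode(intra_only, 10))  # [10]
```

Jumping to frame 10 costs six decodes in the long-GOP sequence but only one in an intra-only format like DV, which is the gap intermediate codecs were designed to close.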

As camcorders increasingly came to rely on long GOP formats like MPEG-2 and H.264 to store their data, a new type of codec, most often called an intermediate codec, arrived on the scene. These include Cineform, Inc.’s Cineform, Apple ProRes, and Avid DNxHD. These codecs use solely intra-frame compression techniques for maximum editing responsiveness, and very high data rates for quality retention.

Function-Specific Codecs

These intermediate codecs highlight the fact that, while there is some crossover, it’s useful to classify codecs by function. The main classes are:

Acquisition codecs used in camcorders

These include Motion-JPEG; the DV codec, as used in DV and DVCPRO HD; MPEG-2, as used in Sony’s XDCAM HD and HDV; and H.264, as used in AVCHD and many digital SLR cameras. The role of the codec here is to capture at the highest quality possible while meeting the data rate requirements of the on-board storage mechanism.

Intermediate codecs used primarily during editing

As mentioned, in this role, these codecs are designed to optimize editing responsiveness and quality.

Delivery codecs

These include MPEG-2 for DVD, broadcast and satellite; MPEG-2, VC-1 and H.264 for Blu-ray; and H.264, VP6, WMV, WebM and multiple other formats for streaming delivery. In this role, the codec must meet the data rate mandated by the delivery platform, which, in the case of streaming, is far below the rates used for acquisition.
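A back-of-the-envelope calculation shows how large the gap between raw, acquisition and delivery rates can be. The sketch assumes 1080p at 30 fps with 8-bit 4:2:0 sampling (12 bits per pixel on average); the 24 Mbps and 5 Mbps targets are illustrative round numbers, not figures from any specific camcorder or service.

```python
def uncompressed_video_bps(width, height, fps, bits_per_pixel):
    """Raw video data rate in bits per second."""
    return width * height * fps * bits_per_pixel


if __name__ == "__main__":
    # Assumption: 1080p at 30 fps, 8-bit 4:2:0 sampling (12 bits per pixel on average).
    raw = uncompressed_video_bps(1920, 1080, 30, 12)
    print(f"uncompressed: {raw / 1e6:.1f} Mbps")  # uncompressed: 746.5 Mbps
    # Illustrative target rates, not figures from any particular product or service.
    for label, bps in (("24 Mbps acquisition", 24e6), ("5 Mbps streaming", 5e6)):
        print(f"{label}: about {raw / bps:.0f}:1 compression")
```

Under these assumptions, the acquisition stream is compressed roughly 31:1 and the streaming stream roughly 149:1.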

Codecs and Container Formats

It’s important to distinguish codecs from container formats, though sometimes they share the same name. Briefly, container formats, or wrappers, are file formats that can contain specific types of data, including audio, video, closed captioning text, and associated metadata. Though there are some general-purpose container formats, like QuickTime, most container formats target one aspect of the production and distribution pipeline, like MXF for file-based capture on a camcorder, and FLV and WebM for streaming Flash and WebM content.

In some instances, a container format has a single or predominant codec, like Windows Media Video and the WMV container format. However, most container formats can hold multiple codecs. QuickTime has perhaps the broadest use, with some camcorders capturing MPEG-2 or H.264 video in the QuickTime container format, and lots of videos distributed on iTunes with a MOV extension.

One area of potential confusion relates to MPEG-4, which is both a container format (MPEG-4 Part 14) and a codec (MPEG-4 Part 2). Technically, at least from the ISO side of things, H.264 is also an MPEG-4 codec (MPEG-4 Part 10), and it has largely supplanted the MPEG-4 Part 2 codec. As a container format, an MP4 file can contain video encoded using the MPEG-2, MPEG-4, VC-1, H.263 and H.264 codecs.

Encode your MP4 file with VC-1, however, and neither QuickTime Player nor any iOS device will be able to play it. So when producing a file for distribution, it’s critical to choose a codec and container format that are compatible with the playback capabilities of your target viewers.
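The VC-1-in-MP4 example shows that a file can be a legitimate MP4 yet unplayable on a given device. This sketch keeps those two questions separate. The capability table is hypothetical and deliberately incomplete; it is not a statement of what any platform actually supports, so consult each platform’s own documentation for real lists.

```python
# Video codecs the text lists for MP4 (not an exhaustive list).
MP4_VIDEO_CODECS = {"mpeg2", "mpeg4", "vc1", "h263", "h264"}

# Hypothetical, deliberately incomplete capability table for this sketch.
PLAYER_SUPPORT = {
    "ios": {("mp4", "h264"), ("mov", "h264")},
    "quicktime": {("mp4", "h264"), ("mov", "h264"), ("mov", "prores")},
}


def check(player, container, codec):
    """Separate 'can this container hold this codec?' from 'can this player decode it?'."""
    if container == "mp4" and codec not in MP4_VIDEO_CODECS:
        return "unknown: codec not in this sketch's MP4 list"
    if (container, codec) in PLAYER_SUPPORT.get(player, set()):
        return "playable"
    return f"valid file, but not playable on {player}"


if __name__ == "__main__":
    print(check("ios", "mp4", "h264"))  # playable
    print(check("ios", "mp4", "vc1"))   # valid file, but not playable on ios
```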

Most Roads Lead to H.264

Historically, video codecs evolved along several separate paths, and it’s interesting that most of those paths led to H.264, which is why the codec has so much momentum today. One path ran through the International Organization for Standardization (ISO), whose standards affect the photography, computer and consumer electronics markets. The ISO published its first video standard, MPEG-1, in 1993, followed by MPEG-2 in 1994, MPEG-4 in 1999, and AVC/H.264 in 2002.

The next path ran through the International Telecommunication Union (ITU), the leading United Nations agency for information and communication technology issues, which contributes to standards in the telephone, radio and television markets. The ITU debuted its first videoconferencing standard, H.120, in 1984, followed by H.261 in 1990, H.262 in 1994, H.263 in 1995, and H.264, which was jointly developed with the ISO, in 2002.

As we’ve seen, digital camcorders originally used the DV codec and then transitioned to MPEG-2, which still has significant share. AVCHD is an H.264-based format, and prosumer camcorders using it are growing in popularity, as are camcorders using the H.264-based AVC-Intra format. H.264 dominates the market for video-capable digital SLR cameras like the Canon 7D, virtually all of which record H.264.

On the delivery side, while most cable TV broadcasts are still MPEG-2-based, H.264 is gaining momentum in CATV and is widely used in satellite broadcasting. The streaming markets were first dominated by proprietary codecs, initially RealNetworks’ RealVideo, then Microsoft’s Windows Media Video, then On2’s VP6, with Sorenson Video 3 the primary QuickTime codec. In 2002, Apple’s QuickTime 6 debuted MPEG-4 support, with H.264 added to QuickTime 7 in 2005. In the same year, the first video-capable iPod shipped, also with H.264 (and MPEG-4) support. In 2007, Adobe added H.264 support to Flash, and Microsoft Silverlight support followed in 2008.

The only market H.264 doesn’t dominate is the intermediate codec market, where long-GOP formats are inappropriate. Almost every other market, from iPods to satellite TV, is driven primarily by H.264.

Audio Codecs

Finally, since most video is captured with audio, the audio component must also be addressed. The most widely used audio format for acquisition and editing is pulse-code modulation (PCM), usually stored in WAV or AVI files on Windows, or AIFF or MOV files on the Mac. PCM is considered uncompressed, so it may be more properly characterized as a format than a codec. To preserve quality, most intermediate codecs simply pass through the uncompressed audio as delivered by the camcorder.
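Because PCM is uncompressed, its data rate is simple arithmetic: sample rate times bits per sample times channels. The sketch assumes 48 kHz, 16-bit stereo, a common setting for camcorder audio, and compares it with an illustrative 128 kbps AAC delivery rate (an assumed figure, not a specification value).

```python
def pcm_bitrate(sample_rate_hz, bits_per_sample, channels):
    """Data rate of uncompressed PCM audio in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


if __name__ == "__main__":
    # Assumption: 48 kHz, 16-bit stereo.
    bps = pcm_bitrate(48_000, 16, 2)
    print(f"{bps / 1000:.0f} kbps")                   # 1536 kbps
    print(f"{bps * 60 / 8 / 1e6:.2f} MB per minute")  # 11.52 MB per minute
    # Illustrative lossy delivery rate for comparison (an assumption).
    print(f"{bps / 128_000:.0f}x the size of a 128 kbps AAC stream")  # 12x
```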

Most delivery formats have an associated lossy audio codec, like MPEG audio and AC-3 Dolby Digital compression on DVDs. Most early streaming technologies, like RealVideo and Windows Media, had proprietary audio components, so RealAudio accompanied RealVideo files, as did Windows Media Audio with Windows Media Video.

This dynamic changed most prominently when Adobe paired the VP6 codec with the MP3 audio codec for Flash distribution. The standards-based audio codec typically paired with H.264 video is Advanced Audio Coding (AAC), while WebM pairs the VP8 video codec with the open-source Vorbis audio codec.

Why Codecs Matter to You

All stages in online video production and distribution involve codecs, and it’s important to understand their operation and their respective roles.
